0. Why do large companies hire data analysts these days?

Large companies hire data analysts because they hold large volumes of data that must be analyzed before it can support informed business decisions.

Data analysts use various tools and technologies to analyze this data and provide insights that help companies improve operations, increase profits, and maintain competitiveness in the market.

With the spread of big data, the demand for data analysts has significantly increased in recent years and is expected to continue to grow in the future.

1. What do you need to study to become a data analyst?

To become a data analyst, you need to study a combination of computer science, statistics, and business.

You need a strong foundation in computer programming languages like Python and SQL, as well as experience with statistical analysis software like R or SAS. Additionally, you need to have a good understanding of business principles and the ability to effectively communicate with non-technical stakeholders.

Continuously honing your skills in machine learning and data visualization is also essential to increase your competitiveness in the job market.

2. What types of data do data analysts mainly analyze in companies: internal data or web data?

Data analysts typically use internal company data and web data to support organizations in making informed decisions.

This may involve collecting, organizing, and structuring large datasets, then applying statistical and analytical methods to identify trends and patterns.

Data analysts also use tools like spreadsheets and databases to store and manipulate data, as well as create reports and visualizations to communicate results to other people within the organization.

The goal of a data analyst is to help organizations improve operations and achieve goals using data.

3. What skills do you need to learn to collect external data as a data analyst?

As a data analyst, you need to learn various skills to collect external data. Key skills to learn include web scraping, API integration, and SQL.

Web scraping involves extracting data from websites using specialized software. It is useful when a website offers no API or other direct way to access its data.
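As a rough illustration of what extracting data from a page can look like, here is a minimal sketch using only Python's standard-library `html.parser`. The HTML fragment, the `name` and `price` class names, and the `ProductParser` helper are all assumptions made up for this example; a real scraper would first download the page and would need selectors matched to the actual site.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML first.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Keyboard</span><span class="price">49.00</span></li>
  <li class="product"><span class="name">Mouse</span><span class="price">19.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> per <li>."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished (name, price) pairs
        self._field = None   # field currently being read, if any
        self._current = {}   # fields collected for the current <li>

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            name = self._current.get("name")
            price = float(self._current.get("price", "nan"))
            self.rows.append((name, price))
            self._current = {}


def parse_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


products = parse_products(SAMPLE_HTML)
```

The parser depends on the exact class names in the page, which is why layout changes on the target site tend to break scrapers.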

API integration involves using Application Programming Interfaces (APIs) to access data from websites or other online services in a systematic and programmatic way. Many websites and services provide APIs for developers to access data in a structured manner.
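The sketch below shows the two halves of a typical API call: building the request URL and reading the structured response. The endpoint `https://api.example.com/v1/posts`, the parameter names, and the JSON field names are hypothetical; every real API documents its own.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, for illustration only.
BASE_URL = "https://api.example.com/v1/posts"


def build_request_url(keyword, page=1, per_page=50):
    """Builds the query URL for one page of results."""
    query = urlencode({"q": keyword, "page": page, "per_page": per_page})
    return f"{BASE_URL}?{query}"


def parse_response(body):
    """Extracts (id, title) pairs and the next page number from a JSON body."""
    payload = json.loads(body)
    items = [(item["id"], item["title"]) for item in payload["items"]]
    return items, payload.get("next_page")


# Sample body standing in for what the hypothetical API might return.
sample_body = (
    '{"items": [{"id": 1, "title": "Hello"}, {"id": 2, "title": "World"}],'
    ' "next_page": 2}'
)
url = build_request_url("data analyst")
items, next_page = parse_response(sample_body)
```

In practice the body would come from an HTTP request to `url` (for example with `urllib.request.urlopen`), and the loop would continue until the API reports no further page.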

SQL (Structured Query Language) is a query language used to manage and manipulate data stored in relational databases. To access and analyze such data as a data analyst, you need to be proficient in SQL.
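For a sense of the kind of query an analyst writes daily, the following sketch runs an aggregate query against an in-memory SQLite database from Python. The `orders` table, its columns, and the sample rows are invented for the example.

```python
import sqlite3

# In-memory database with a made-up orders table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("Seoul", 120.0), ("Busan", 80.0), ("Seoul", 200.0), ("Busan", 40.0)],
)

# Order count and revenue per region, highest revenue first.
rows = conn.execute(
    """
    SELECT region, COUNT(*) AS n_orders, SUM(amount) AS revenue
    FROM orders
    GROUP BY region
    ORDER BY revenue DESC
    """
).fetchall()
conn.close()
```

The same `GROUP BY` pattern carries over to production databases, although connection details and SQL dialects differ.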

In addition to these technical skills, strong problem-solving and communication skills are necessary to effectively collect and interpret external data.

4. How much is the salary of a data analyst with excellent data collection skills?

The salary of a data analyst with excellent data collection skills varies with role, industry, location, experience level, and specific skills, so it is difficult to give a single figure.

According to data from the Bureau of Labor Statistics, the average salary for occupations in data analysis, including data analysts, was $84,810 in May 2019. However, this figure may not reflect the salaries of top data analysts. Generally, data analysts with excellent data collection skills and strong performance in their field may receive higher salaries compared to those with more common skills and experience.

Data analysts and data scientists tend to receive relatively higher salaries than similar occupations.

5. What is the most challenging part of web crawling (scraping)?

As a data analyst, having the ability to collect data is essential.

One of the most challenging aspects of web scraping is dealing with constantly changing web page structures. Websites are often updated and redesigned, leading to changes in page structures. Web scraping scripts are designed to extract data from specific elements on web pages based on the structure, so changes in structure can break web scraping scripts. As a result, web scraping scripts need to be frequently updated and maintained to continue functioning properly.
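One common way to soften this problem, sketched here as an assumption rather than a prescribed method, is to keep several fallback patterns per field and to return an explicit "not found" signal when none match, so that breakage is noticed quickly. The patterns and sample layouts below are hypothetical, and regular expressions are used only to keep the example short; a production scraper would more likely use a proper HTML parser.

```python
import re

# Hypothetical patterns: the current page layout first, then older layouts.
PRICE_PATTERNS = [
    re.compile(r'<span class="price-v2">([\d.]+)</span>'),
    re.compile(r'<span class="price">([\d.]+)</span>'),
    re.compile(r'data-price="([\d.]+)"'),
]


def extract_price(html):
    """Returns the price from the first matching pattern, or None if unknown."""
    for pattern in PRICE_PATTERNS:
        match = pattern.search(html)
        if match:
            return float(match.group(1))
    return None  # no known layout matched: the scraper needs maintenance


old_layout = '<div><span class="price">19.50</span></div>'
new_layout = '<div><span class="price-v2">21.00</span></div>'
unknown_layout = "<div><b>21.00</b></div>"
```

Logging every `None` result makes it easier to see when a site redesign has outpaced the known patterns.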

Another challenge of web scraping is dealing with websites that attempt to prevent it. Some websites use security measures such as bot detection and rate limiting to stop scrapers from extracting data from their pages. This makes data collection harder and may require more advanced techniques such as proxies and headless browsers.
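Besides proxies and headless browsers, a simple response to rate limiting is to slow down and retry. The sketch below illustrates exponential backoff under stated assumptions: `fetch` is a hypothetical function returning a `(status, body)` pair, and HTTP statuses 429 and 503 are treated as "try again later". The `sleep` parameter is injectable so the example runs without actually waiting.

```python
import time


def fetch_with_backoff(fetch, url, max_retries=4, base_delay=1.0, sleep=time.sleep):
    """Calls fetch(url); on 429 or 503, waits base_delay * 2**attempt and retries."""
    status, body = None, None
    for attempt in range(max_retries):
        status, body = fetch(url)
        if status not in (429, 503):
            return status, body
        sleep(base_delay * 2 ** attempt)
    return status, body  # still rate-limited after all retries


# Stand-in for a real HTTP call: rate-limited twice, then succeeds.
responses = iter([(429, ""), (429, ""), (200, "<html>ok</html>")])
waits = []  # records the delays instead of sleeping
result = fetch_with_backoff(
    lambda url: next(responses),
    "https://example.com/page",
    sleep=waits.append,
)
```

Here the scraper waits 1 second, then 2 seconds, before the third attempt succeeds.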

Overall, web scraping can be a challenging and time-consuming task, but it lets data analysts collect data from a wide range of sources, which makes it an essential skill.

6. How to scrape a large amount of data from the web

To scrape a large amount of data from the web, you need to combine multiple technologies to make the scraping process efficient and scalable. Some key considerations when scraping a large amount of data include:

Use a distributed scraping architecture: Instead of running a single scraper on a single system, use a distributed architecture where multiple scrapers can run in parallel on multiple systems. This allows you to scale scraping efforts and collect data more quickly.

Use caching and queuing: When scraping a large amount of data, using caching and queuing to store and manage the collected data can be useful. Caching allows you to temporarily store data so you don't have to scrape the same data multiple times, and queuing allows you to prioritize which pages to scrape and when, making the scraping process more efficient.

Use headless browsers: Headless browsers are web browsers that run without a user interface. They can execute JavaScript and render pages like a regular web browser, making them useful for scraping websites that use JavaScript to render content. This can make it easier to scrape websites with complex JavaScript-based structures.

Use proxies: Using proxies allows you to route web scraping traffic through multiple IP addresses, helping to prevent detection and blocking by websites attempting to prevent scraping. This can be useful for scraping data from websites with strict scraping policies.
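Several of the considerations above can be combined in a small sketch: a priority queue decides what to scrape first, a cache prevents fetching the same URL twice, a thread pool runs downloads in parallel, and proxies are rotated round-robin. Everything here is a simplified assumption: `fake_fetch` stands in for a real download, the proxy addresses are hypothetical, and threads on one machine stand in for a truly distributed setup across multiple systems.

```python
import heapq
import itertools
from concurrent.futures import ThreadPoolExecutor

# Hypothetical proxy addresses, used in round-robin order.
PROXIES = itertools.cycle(["http://proxy-a:8080", "http://proxy-b:8080"])


def fake_fetch(url, proxy):
    """Stand-in for a real download routed through `proxy`."""
    return f"content of {url} via {proxy}"


def crawl(jobs, cache, fetch=fake_fetch, workers=4):
    """Fetches uncached URLs in priority order; lower numbers go first.

    jobs:  iterable of (priority, url) pairs
    cache: dict mapping url -> page content, updated in place
    Returns the list of URLs that were actually fetched.
    """
    queue = list(jobs)
    heapq.heapify(queue)
    batch, queued = [], set()
    while queue:
        _, url = heapq.heappop(queue)
        if url in cache or url in queued:
            continue  # already scraped or already scheduled
        queued.add(url)
        batch.append((url, next(PROXIES)))
    # Download the batch in parallel and store each page in the cache.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        pages = pool.map(lambda job: fetch(*job), batch)
        for (url, _), page in zip(batch, pages):
            cache[url] = page
    return [url for url, _ in batch]


cache = {}
jobs = [
    (2, "https://example.com/b"),
    (1, "https://example.com/a"),
    (2, "https://example.com/b"),  # duplicate, fetched only once
]
first_run = crawl(jobs, cache)
second_run = crawl(jobs, cache)  # everything is cached, so nothing is fetched
```

In a larger system the in-memory queue and cache would typically be replaced by shared services so that scrapers on different machines can coordinate.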

7. Web Scraping Specialist Company Hashscraper

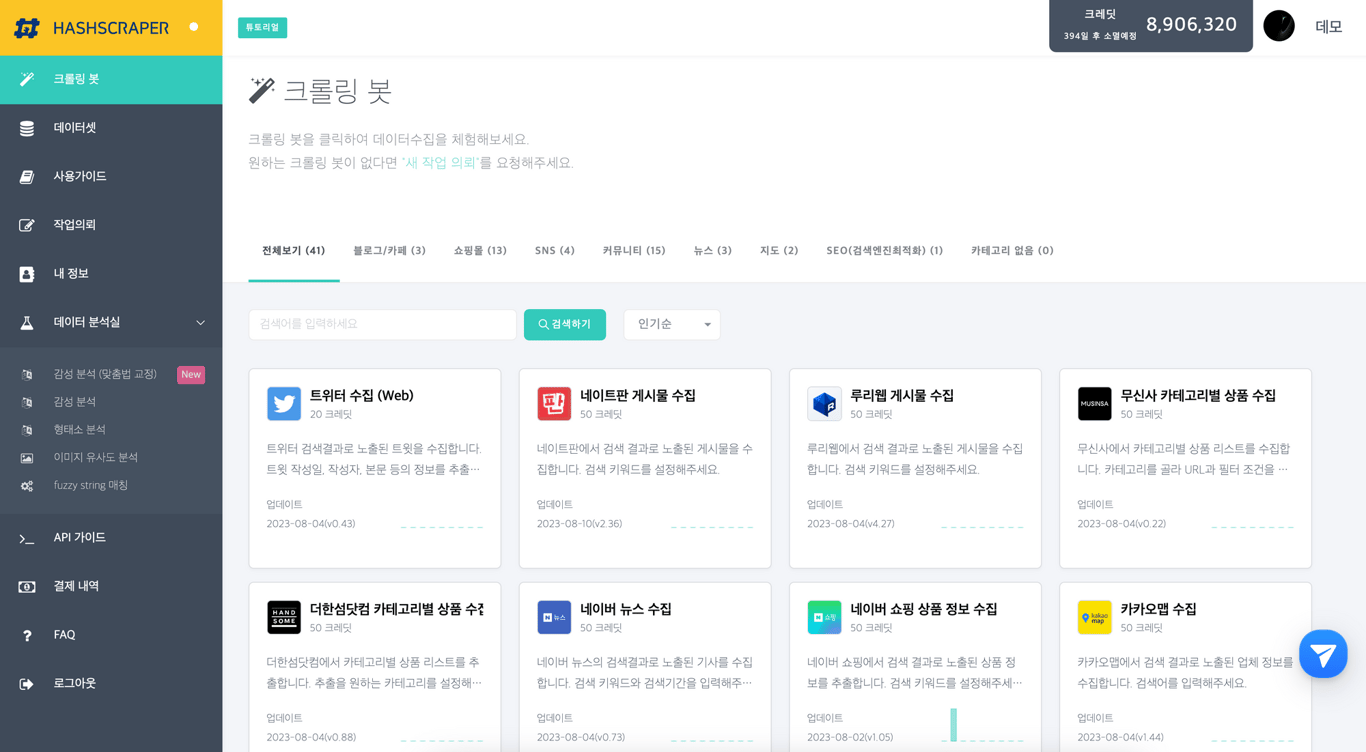

Hashscraper Data Collection Dashboard (Can collect data from SNS, communities, e-commerce, etc.)

Hashscraper is a company specializing in web crawling that possesses all the necessary technologies to scrape massive amounts of data from the internet.

Hashscraper has a team of skilled data analysts and developers who are experts in web crawling. They use advanced algorithms and sophisticated software to crawl the web and collect data on a large scale. This allows them to collect a large amount of data quickly and efficiently without manually visiting each website.

The company's web scraping technology can handle even the largest and most complex datasets. They can extract data from websites with complex layouts and structures, as well as from sites that require authentication or use CAPTCHA to prevent scraping.

In addition to web crawling, Hashscraper offers various other services that help companies understand their data. These include data cleaning and preprocessing, data visualization, and statistical analysis. The company's team of experts can help organizations identify trends and patterns in data and make informed decisions based on this information.

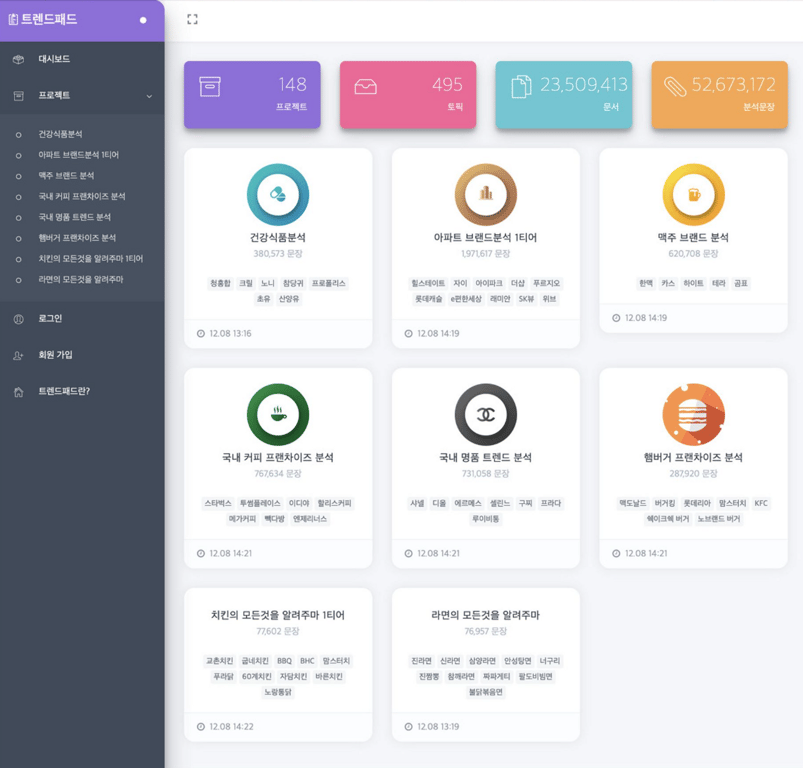

Real-time Trend Analysis Service - TrendPad
