Tired of news clipping? Then read this! Without this method, you will keep losing time.

Do you spend your time searching a flood of news articles for numerous keywords, reading them, and copying and pasting over and over? Automating news clipping with web crawling saves both time and cost!

Many people search portal sites for industry news every day to keep up with the latest trends. Searching daily for news relevant to your company is an important routine: it helps you grasp industry trends and the market outlook quickly, monitor competitors' moves, and track changes in government policy and regulation so that you stay competitive.

However, manually searching through the new articles that pour in every day is cumbersome and inefficient. It is a typical simple, repetitive task, so it wears people out, and as the number of keywords grows, the working time and labor costs grow significantly with it.

So try automating news collection with web crawling! With an automated service, relevant news is gathered for you every morning.

Here is the case of Company A, a fintech company that has fully automated its news clipping with Hashscraper.

1. Before & After

Issue: Rising labor costs for news clipping, and web crawlers that are difficult to develop and manage in-house.

Company A wanted to collect relevant news every day from across the fintech industry, including finance, blockchain, simple payment, P2P, and asset management, in order to examine market and competitor trends and provide selected news to its members. The most inefficient part of this was collecting the news articles.

Extracting and managing news articles by hand was a cumbersome, repetitive task, and as the number of keywords for industry trends, competitor news, and related news increased, so did concerns about efficiency. Even when the internal development team built web crawlers itself, problems such as IP blocking and crawler maintenance came up frequently.

In the end, Company A came to Hashscraper to fix this inefficiency.

Solution: Completely automated process from daily news collection to mailing, with no manual intervention

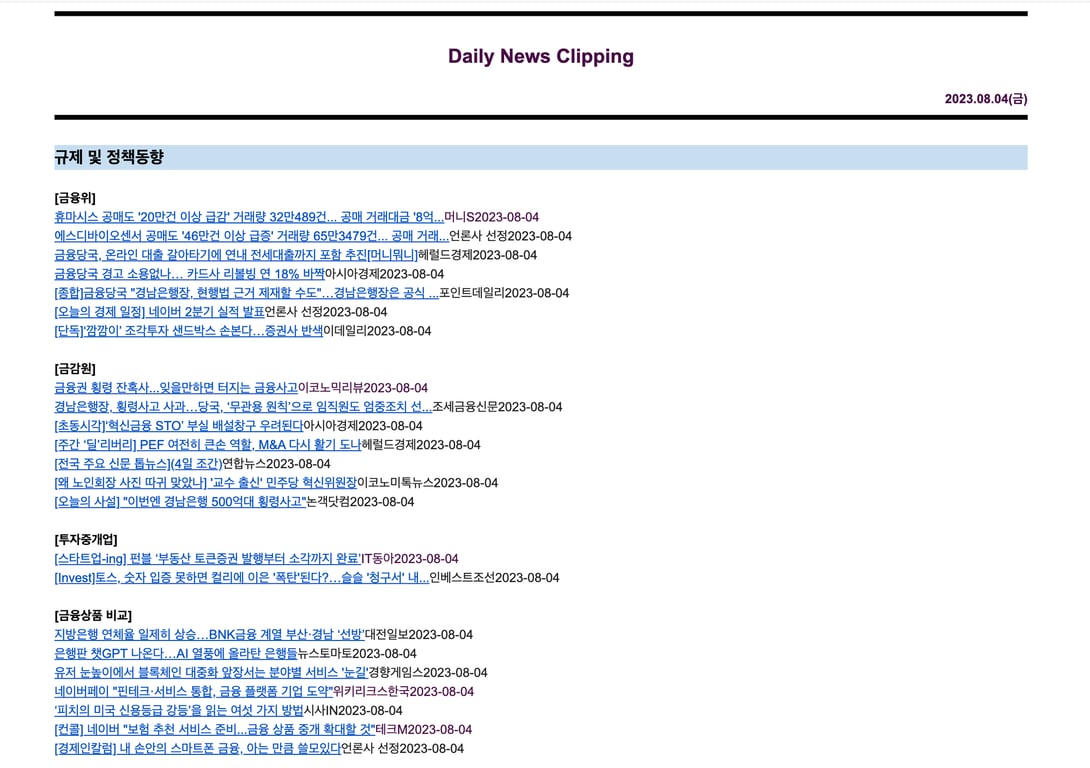

Simply set the keywords you want to search, and Hashscraper's news crawling bots collect the relevant articles every day and send them by email, so there is nothing else you need to take care of.
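To make the keyword-to-email flow concrete, here is a minimal sketch in Python. It is not Hashscraper's implementation: the function name `build_digest` and the article fields `title` and `url` are assumptions made for illustration. It only shows the general idea of grouping collected articles by keyword, skipping duplicates, and composing a report email.

```python
from email.message import EmailMessage


def build_digest(articles, keywords, sender, recipients):
    """Compose a plain-text digest email from already-collected articles.

    `articles` is a list of dicts with hypothetical "title" and "url" keys.
    """
    seen_urls = set()
    lines = []
    for keyword in keywords:
        matches = []
        for article in articles:
            # Case-insensitive title match; each URL is reported only once.
            if keyword.lower() in article["title"].lower() and article["url"] not in seen_urls:
                seen_urls.add(article["url"])
                matches.append(article)
        if matches:
            lines.append(f"[{keyword}]")
            lines.extend(f"- {a['title']} ({a['url']})" for a in matches)
            lines.append("")

    message = EmailMessage()
    message["Subject"] = f"Daily news clipping: {len(seen_urls)} articles"
    message["From"] = sender
    message["To"] = ", ".join(recipients)
    message.set_content("\n".join(lines) or "No matching articles today.")
    return message
```

The returned message could then be handed to the standard library's `smtplib.SMTP(...).send_message(message)` using your own mail server settings, and the whole script scheduled to run once each morning.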

In particular, the range of sites and keywords searched has expanded significantly. Previously, working by hand, Company A searched about 10 keywords on 1-2 websites; since introducing Hashscraper, it searches about 100 keywords on 3 major portal sites every day to clip news.

Furthermore, Hashscraper's news crawling bots are managed through a real-time collection monitoring system, and errors receive immediate maintenance, so Company A can deliver selected news to its members quickly without worrying about crawler management.
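The size of that jump is easier to see as a count of keyword and site combinations, since each keyword has to be searched on each site. The short sketch below uses the approximate figures from the text; the keyword and site names are placeholders, not Company A's real configuration.

```python
from itertools import product


def search_jobs(keywords, sites):
    """Return every (keyword, site) pair that has to be searched once per day."""
    return list(product(keywords, sites))


# Manual process: about 10 keywords on at most 2 websites.
before = search_jobs([f"keyword-{i}" for i in range(10)], ["site-1", "site-2"])

# Automated process: about 100 keywords on 3 portal sites.
after = search_jobs(
    [f"keyword-{i}" for i in range(100)],
    ["portal-1", "portal-2", "portal-3"],
)

print(len(before), len(after))  # 20 300
```

Going from at most 20 searches a day to about 300 is a workload that is impractical to cover by hand, which is why the expansion was only possible after automation.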

Image: Daily News Clipping email capture

2. Examples of using Hashscraper news collection bots

Recommendation: Daily automatic collection and automatic mailing

Hashscraper collects news articles from portals such as Google, Naver, and Daum. Simply set the keywords you want to search, and it extracts the search results. By connecting the automatic mailing service, you can receive the collected news as an email report at the time you choose, which makes it easy to share with members. The person in charge does not have to do anything along the way :)

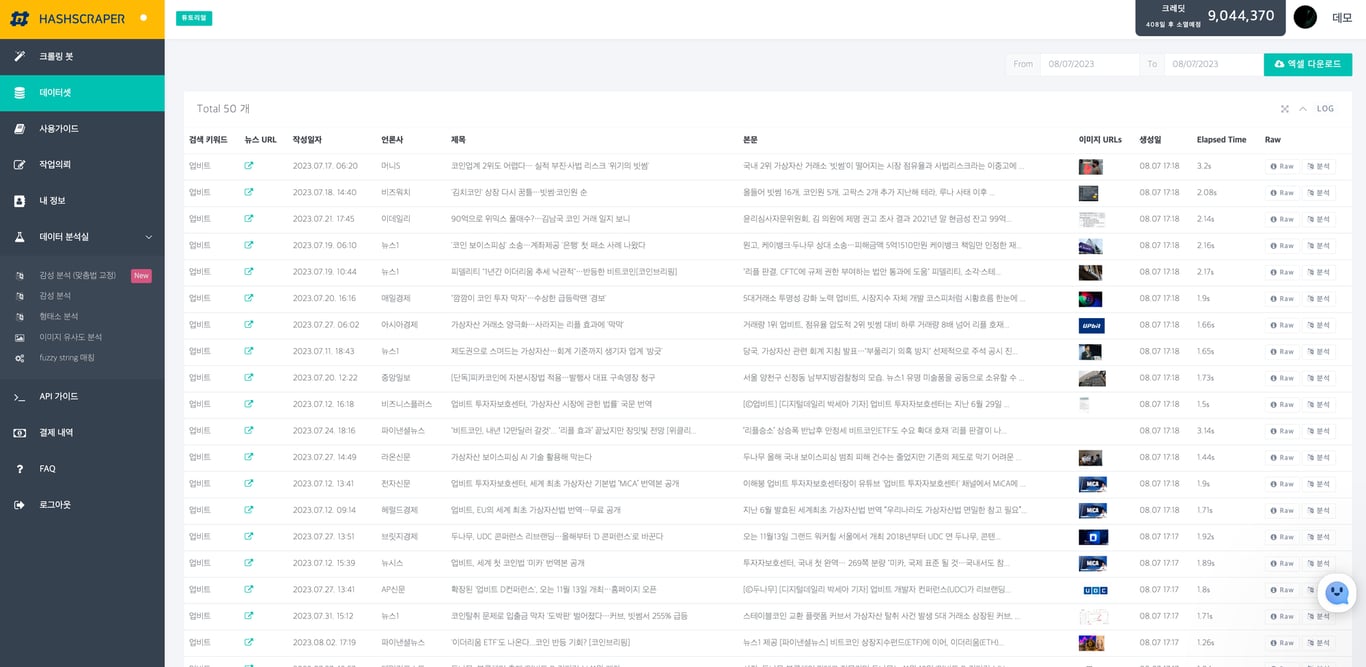

If you have an internal development team, you can go beyond the automatic email integration and also receive the data easily through Excel downloads and APIs.
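For a development team that wants to produce its own spreadsheet from collected articles, the following Python sketch writes them to a CSV file that Excel can open. The column names are assumptions chosen for illustration and do not describe Hashscraper's actual export format or API.

```python
import csv
import io

# Hypothetical column layout for a news collection result table.
FIELDS = ["keyword", "title", "press", "published_at", "url"]


def articles_to_csv(articles):
    """Serialize a list of article dicts to CSV text; missing fields stay empty."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(articles)
    return buffer.getvalue()


def save_for_excel(articles, path):
    """Write the CSV with a UTF-8 byte-order mark so Excel detects the encoding."""
    with open(path, "w", encoding="utf-8-sig", newline="") as f:
        f.write(articles_to_csv(articles))
```

The `utf-8-sig` encoding matters when titles contain non-ASCII text such as Korean, because without the byte-order mark Excel may display the characters incorrectly.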

Image: Attachment. Naver news collection result table

Is news clipping essential to your company but frustratingly inefficient? Would IP blocking and maintenance be a burden if your internal development team built the crawler itself?

If you want to fully automate simple, repetitive tasks like daily news clipping, try introducing Hashscraper as Company A did. Leave an implementation inquiry below, and Hashscraper, with its extensive experience in web data collection and analysis, will propose the best solution for your needs.
